Running Stable Diffusion with an Old GPU

Diffusion-based AI art is one of the hottest topics of 2022, and one of the most earth-shattering announcements of the past month has been the August 2022 open-sourcing of one of these state-of-the-art (SOTA) models, known as Stable Diffusion. That’s right: mere months after OpenAI released its big potential cash cow, DALLE-2, to the world, Stability AI, a new and largely privately funded organization based in the UK, open-sourced its model to the world out of nowhere. This was especially shocking because research groups at Google working on similar (and likely superior) systems, such as Imagen and Parti, only released their papers in May and June respectively, and held off on any public release of the models due to their potential for harm (e.g., generating explicit or politically sensitive content).

Under the hood (and similar to DALLE-2), Stable Diffusion is built on the idea of diffusion, a class of algorithms that has largely displaced GANs for image generation in recent years. In a nutshell, the model learns how to denoise an image that starts out as pure random noise, conditioned on some sort of data. This data can be a text prompt, but it can also be another image. This effectively enables novices to become “award-winning digital artists” with *ahem* minimal effort.
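To make “learns how to denoise” a bit more concrete, here is a minimal pure-Python sketch of the DDPM-style training setup that diffusion models build on: a forward process that blends a clean image with Gaussian noise according to a schedule, and the simple “predict the noise” loss a network is trained on. Assumptions: the linear beta schedule (1e-4 to 0.02 over 1000 steps) follows the original DDPM paper’s defaults and is not necessarily the schedule Stable Diffusion itself uses; the function names are hypothetical; images are flattened to plain lists of floats; and the neural network, the text conditioning, and Stable Diffusion’s compressed latent space are all left out.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_1..beta_T, spaced linearly (DDPM-style defaults, assumed)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s): how much signal survives."""
    out, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        out.append(running)
    return out


def add_noise(x0, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(a) * x0 + sqrt(1 - a) * noise.

    Returns the noisy image and the Gaussian noise that was mixed in,
    which is the target a denoising network is trained to predict.
    """
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, noise)]
    return xt, noise


def noise_prediction_loss(predicted_noise, true_noise):
    """Mean squared error between the network's noise estimate and the real noise."""
    return sum((p - e) ** 2 for p, e in zip(predicted_noise, true_noise)) / len(true_noise)


if __name__ == "__main__":
    rng = random.Random(0)
    alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
    x0 = [0.5, -0.25, 1.0, 0.0]  # a toy 4-"pixel" image
    for t in (0, 250, 999):
        xt, _ = add_noise(x0, t, alpha_bars, rng)
        print(f"t={t:4d}  signal kept={alpha_bars[t]:.5f}  x_t={[round(v, 3) for v in xt]}")
```

With this schedule the surviving signal fraction falls from almost 1 at the first step to well under 1e-4 at the last, so the final step is essentially pure noise. Generation runs the other way: start from random noise and repeatedly remove a trained network’s noise estimate.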

As of 2022, Stable Diffusion joins Midjourney, DALLE-2, and Craiyon/DALLE-mini as an AI text-to-image generator you can use right now, and it is the only one of these whose content filters can be entirely disabled.

Running Stable Diffusion (Locally)

Note: Last updated 9/10/2022.

You can access a version of Stable Diffusion online via Hugging Face.

However, for the “real” enthusiasts who are interested in digging into the models, there’s no substitute for running the open-source model on your own machine. There are currently many forks of the original GitHub release, but the most feature-rich repo, with a fully fledged Gradio UI (including image editing and prompt matrix support), is the stable-diffusion-web-ui repo.

- Clone the stable-diffusion-web-ui repo (which is highly likely to change) and follow the install instructions for your platform. The repo should offer installation options even for systems with very little VRAM.

- Get the checkpoints here and copy them into models/ldm/stable-diffusion-v1.

- Build the Docker image and start the container:

```shell
cp .env_docker.example .env_docker
# Edit the .env_docker file
docker compose up --build
```

- Open http://localhost:7860 in your browser.
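Because the first `docker compose up --build` has to build the image before anything is served, the UI at http://localhost:7860 may take a while to appear. If you want to script that wait, a small stdlib-only Python helper along these lines can poll the address until it answers. `wait_for_ui` and its defaults are hypothetical (not part of the stable-diffusion-web-ui repo), and the sketch assumes the container publishes the UI on port 7860 of localhost, as in the step above.

```python
import http.client
import time
import urllib.request


def wait_for_ui(url="http://localhost:7860", timeout=300.0, interval=5.0):
    """Poll `url` until it answers with HTTP 200 or `timeout` seconds elapse.

    Hypothetical helper: returns True as soon as the server responds,
    False if it never does within the time budget.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Each attempt gets its own short socket timeout so a hung
            # connection cannot block the loop forever.
            with urllib.request.urlopen(url, timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except (OSError, http.client.HTTPException):
            # Connection refused/reset, timeouts and HTTP error codes all land
            # here (urllib.error.URLError subclasses OSError): the container
            # is probably still building or loading, so just try again.
            pass
        if time.monotonic() + interval > deadline:
            return False
        time.sleep(interval)
```

Calling `wait_for_ui()` right after starting the container blocks for up to five minutes with these defaults; a `False` result may mean the build failed, so check the `docker compose` output before retrying.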

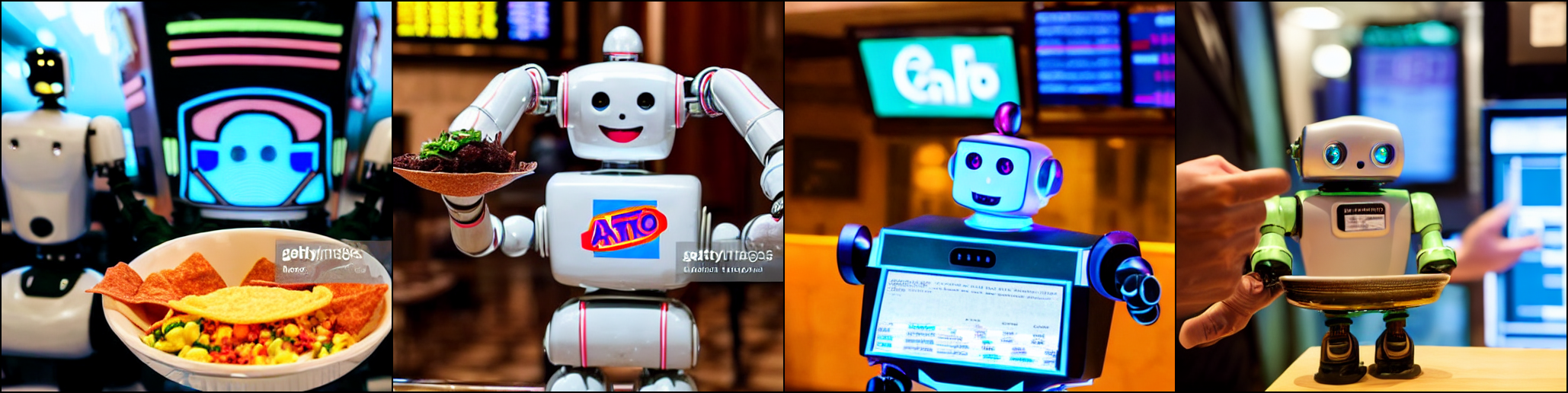

Here are some pictures of “Robot holding a tacorito bowl inside the NYSE, stock photo” (check out that Getty Images watermark!)
