Stable Diffusion - De-painting with Stable Diffusion img2img

Stable diffusion has been making huge waves recently in the AI and art communities (if you don’t know what that is feel free to check out this earlier post).

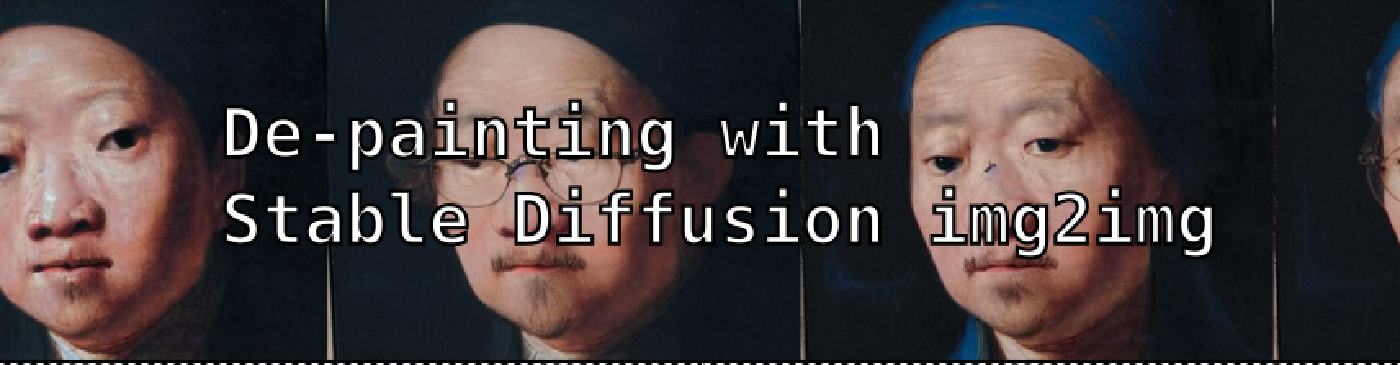

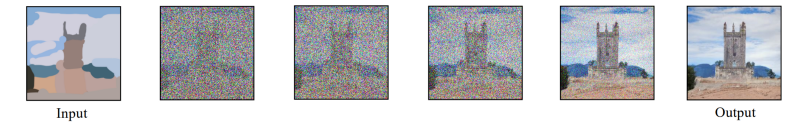

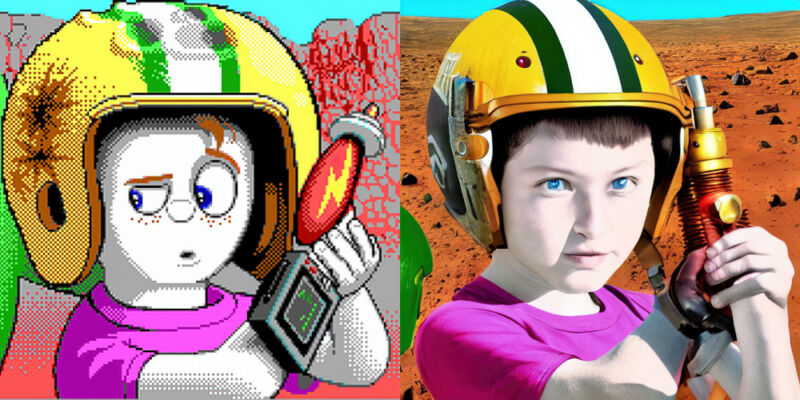

One of the most amazing features is the ability to condition image generation from an existing image or sketch. Given a (potentially crude) image and the right text prompt, latent diffusion models can be used to “enhance” an image:

Courtesy of Louis Bouchard

Img2Img with vintage videogame art

One interesting use-case has been for “upscaling” videogame artwork from the 80s and early 90s.

Here’s are some examples from reddit user frigis9:

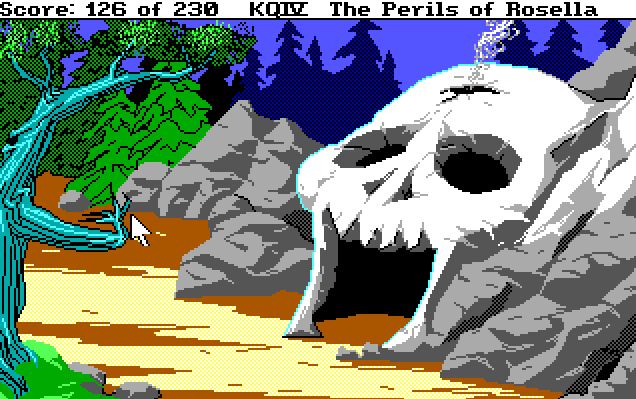

And some screenshots from old Sierra games (courtesy of cosmicr):

| Before | After |

|---|---|

|  |

| Before | After |

|---|---|

|  |

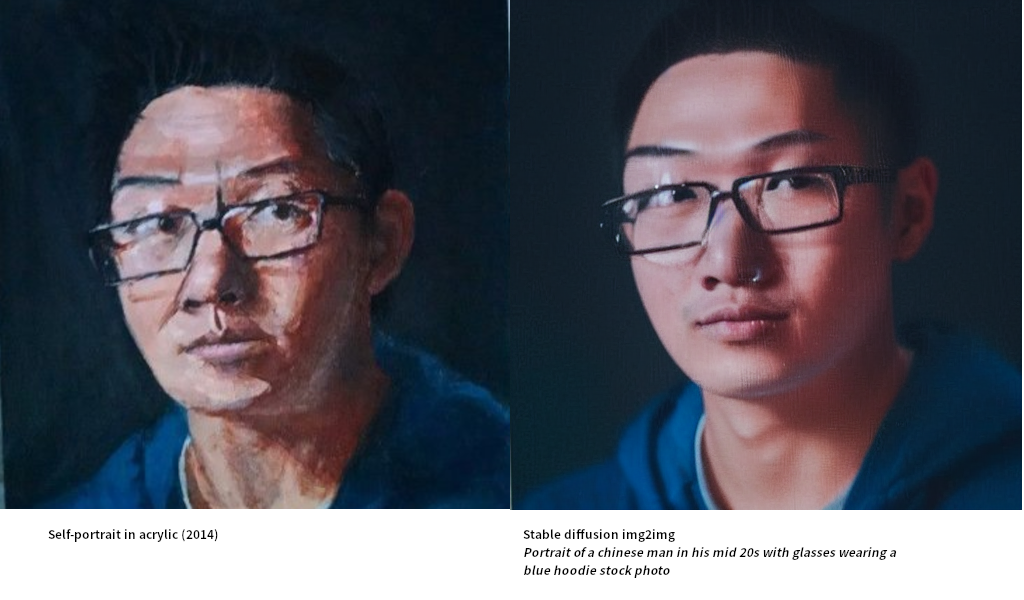

Running Img2Img on a self-portrait

One of the big controvertial implications of AI generated art is what happens to artists. With the capability to generate a virtually unlimited supply of art, will artists and graphic designers simply go out of work?

The ability to condition art generation on existing (even low-quality images) however evokes the question: Could these sorts of tools be used to enhance the work of artists rather than displace them?

A couple of years ago, I got into painting, and I made a self portrait of myself which I still use today for many profile pics. This self-portrait took me upwards of 9 hours to paint in its entirety, using no photographic reference (I literally sat in front of a mirror the entire time). Naturally, this made me think – what happens if I put my own self-portrait into Img2img. Could I enhance this painting? Could I convert it from something which came from the mind’s eye into a photograph, for example? What person do I expect to come out the other end? Since my photo and self-portrait probably isn’t in the Stable-diffusion training set, perhaps this would serve as a good control for “de-painting”!

The original self-portrait:

My first pass at doing this yielded some interesting results: Using a prompt matrix, at high denoising scale of 0.8 and a CFG of ~8, the latent diffusion network has a lot of license to follow the prompt:

Here’s the output of the prompt Asian | man with thick glasses | wearing a blue hoodie | Rembrandt painting, 1630

At high denoising levels, the network is free to deviate from the conditioning image at will. The prompt “Asian” even creates a picture of an asian woman, albeit one wearing a blue collared shirt.

The re-ordered prompt Portrait of | a chinese man in his mid 20s wearing a blue hoodie | rembrandt 1630 at lower denoising scale of 0.6 produces some equally interesting results!

Best result (out of ~50 attempts at parameter tweaking)

To actually get an image that preserved my original facial characteristics, it was necessary to try various denoising levels. Too high and the “self-portrait” turns into a totally different Asian person. Too low, and I end up with a heavily distorted face. The best result is pictured below. It’s quite good albeit has erased any traces of my original haircut.

That actually does look like a photo of me (albeit arguably better looking than I am in real-life).

That actually does look like a photo of me (albeit arguably better looking than I am in real-life).

Final prompt/parameters:

Portrait of an chinese man in his mid 20s with glasses wearing a blue hoodie stock photo

Steps: 50, Sampler: Euler a, CFG scale: 8, Seed: 45, Size: 512x512, Denoising strength: 0.23

Running Img2Img on Historical Paintings

If this depainting worked (after many attempts) on images of myself, perhaps we can try applying the same approach to some historical paintings.

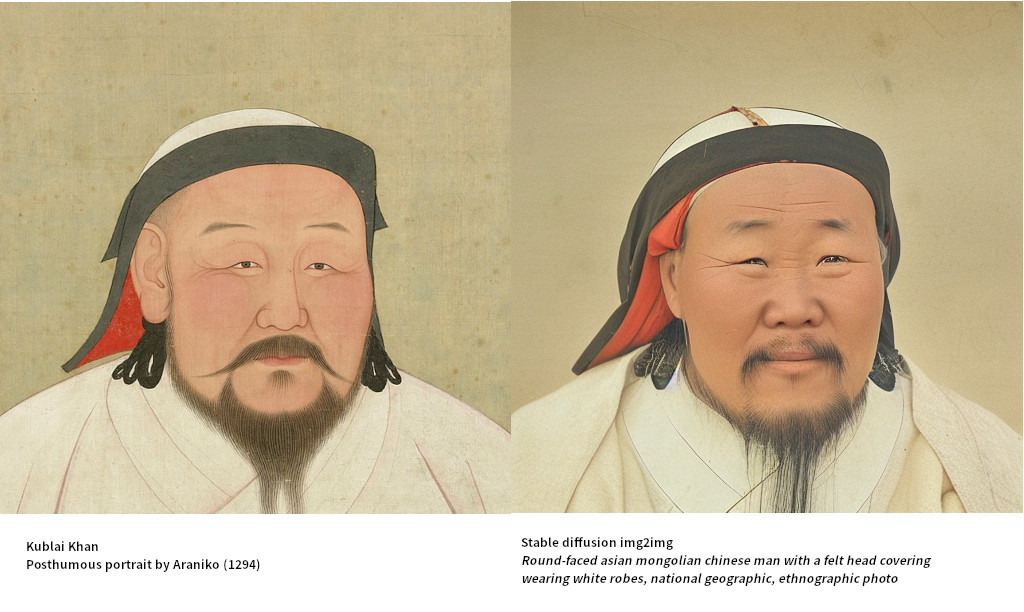

During the height of the Mongol Empire in the 13th century, the Mongol Khans living in China (the Yuan Dynasty in Chinese historiography) commissioned family portraits. The Nepalese artist and astrologer Araniko (also known as Anige/Qooriqosun) was commissioned to paint a posthumous portrait of Kublai Khan after his death in 1294. Using a denoising strength of 0.6, and omitting any mention of the name Kublai Khan yielded some pretty interesting “photos”, the best of which I show here:

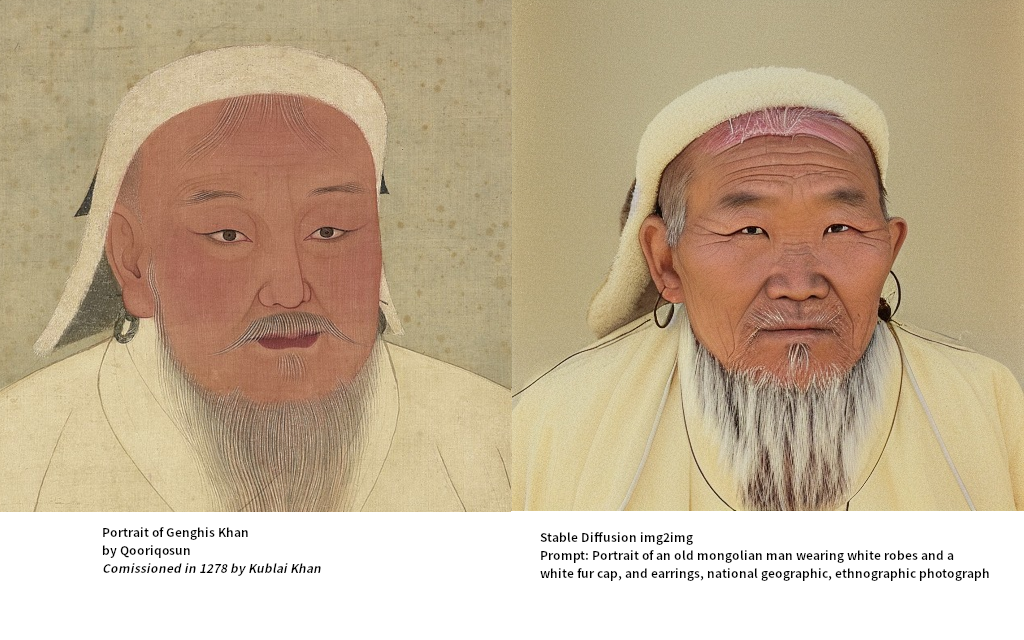

Kublai Khan also commissioned a portrait of his (by then) long-deceased grandfather Genghis Khan via verbal descriptions from people who knew Genghis Khan in life. I believe this is the closest we will ever get to a contemporary image of Genghis Khan. There are a ton of artistic depictions of Genghis Khan on the web that were probably scrapped by stable-diffusion for building the training set, so I explicitly omitted using his name:

At such high denoising strengths needed to yield “photographic results) one starts to wonder how strongly the latent-diffusion model uses “artistic license.

For comparison, the prompt Portrait of an old monoglian man wearing white robes, and a white fur cap, and earrings, national geographic, ethnographic photograph yields pretty a pretty similar looking “generic old mongolian man”.

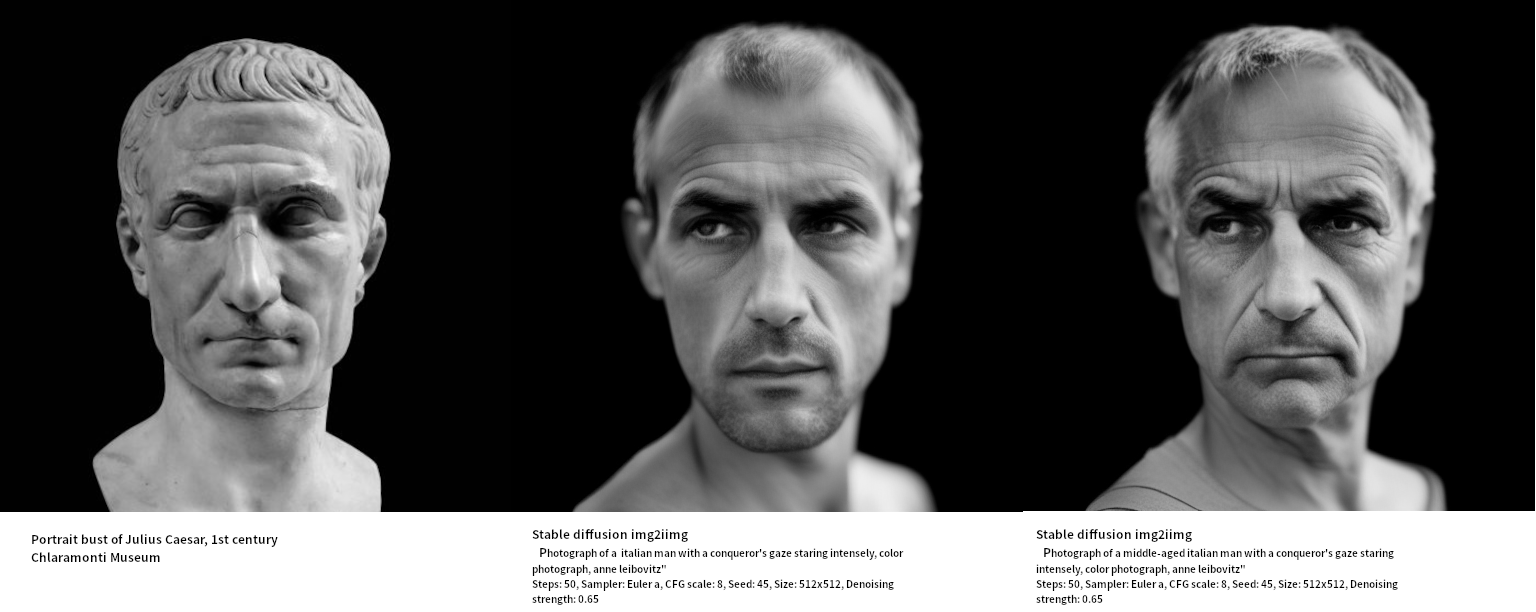

I tried the same exercise on Julius Caesar. There is a bust of Caesar which today sits in the Chiaramonti Museum in Italy. Trying out some prompts at high denoising levels yielded the following (these are cherry-picked outputs of course!):

Photograph of a [middle-aged] italian man with a conqueror's gaze staring intensely, color photograph, anne leibovitz Steps: 50, Sampler: Euler a, CFG scale: 8, Seed: 45, Size: 512x512, Denoising strength: 0.65

Running Img2Img on Comic Art

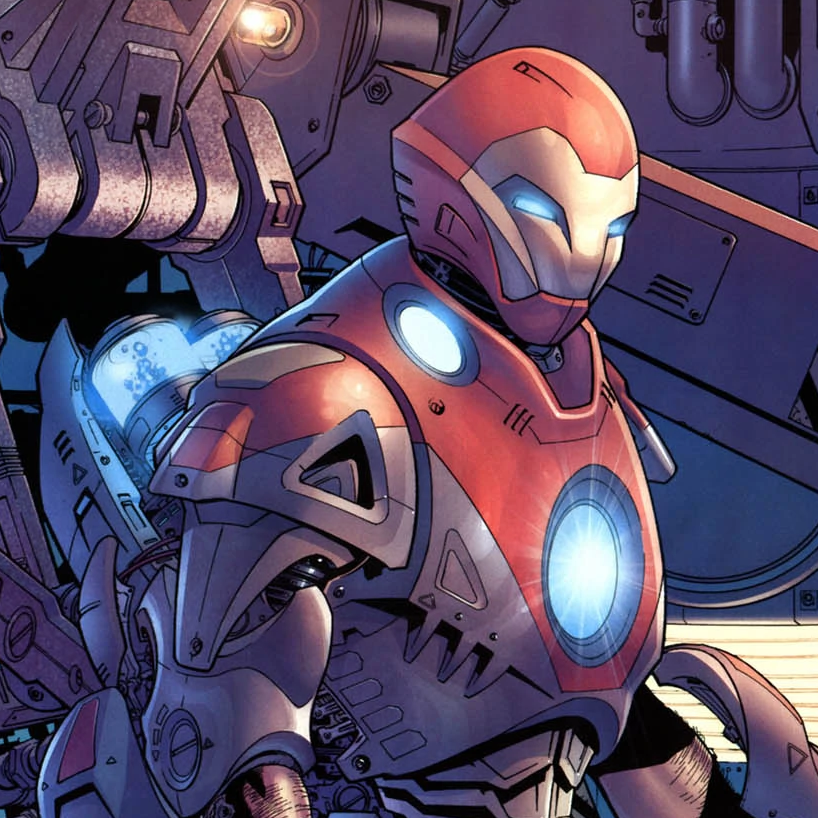

Finally, I tried running img2img on some comic art. The interesting thing about comic book art is that virtually every popular comic book character has some sort of high def cgi or live-action adaptation somewhere at this point.

Back in the early 2000s, before the current explosion in popularity of Marvel properties on the big screen, Marvel Comics attempted to launch an “Ultimate Comics” Universe which involved some interesting redesigns of their characters.

Here is a picture of “Ultimate Iron Man”, the harder-drinking, more hedonistic counterpart to the mainline Marvel Universe’s Iron Man, re-designed by artist Bryan Hitch:

Parameters:

Futuristic red and gold metal suit with glowing blue power source in a room full of futuristic machinery, 3d render, zbrush

denoising_strength: 0.28

sampler_name: k_euler_a

seed: 45

Using the words iron man instead of metal replaces the face mask of the helmet with a much more recognizable “non-ultimate” iron man mask!

Stable-diffusion has a latent encoding of iron man with an understanding of the iron man mask!

Futuristic red and gold *iron man* suit with glowing blue power source in a room full of futuristic machinery, 3d render, zbrush

denoising_strength: 0.28

sampler_name: k_euler_a

seed: 45

Note that higher denoising strength would cause the network to deviate from the image conditioning entirely!

Conclusions:

Stable Diffusion and other image generation AI tools are incredibly powerful, and at low denoising levels, can be used to enhance artwork in ways that were unimaginable just years before. At the same time, it’s readily apparent that there are some things to watch out for when using these types of tools to augment one’s own drawings. Stable Diffusion draws from a huge corpus of images and has internal representations of a lot of concepts ranging from “Old Mongolian Man” to “Iron Man”.

There is clearly a lot of room to develop the image conditioning aspects of these types of models to become more powerful tools for artists. Perhaps one day (likely very soon), we will see a lot more artists starting off with quick sketches, and using latent diffusion models to produce the final product with much quicker turnaround time!

Related Posts

Why Big Tech Wants to Make AI Cost Nothing

Earlier this week, Meta both open sourced and released the model weights for Llama 3.1, an extraordinarily powerful large language model (LLM) which is competitive with the best of what Open AI’s ChatGPT and Anthropic’s Claude can offer.

Read moreHost Your Own CoPilot

GitHub Co-pilot is a fantastic tool. However, it along with some of its other enterprise-grade alternatives such as SourceGraph Cody and Amazon Code Whisperer has a number of rather annoying downsides.

Read moreAll the Activation Functions

Recently, I embarked on an informal literature review of the advances in Deep Learning over the past 5 years, and one thing that struck me was the proliferation of activation functions over the past decade.

Read more